Vision-Language-Action Foundation Models for General Embodied Intelligence

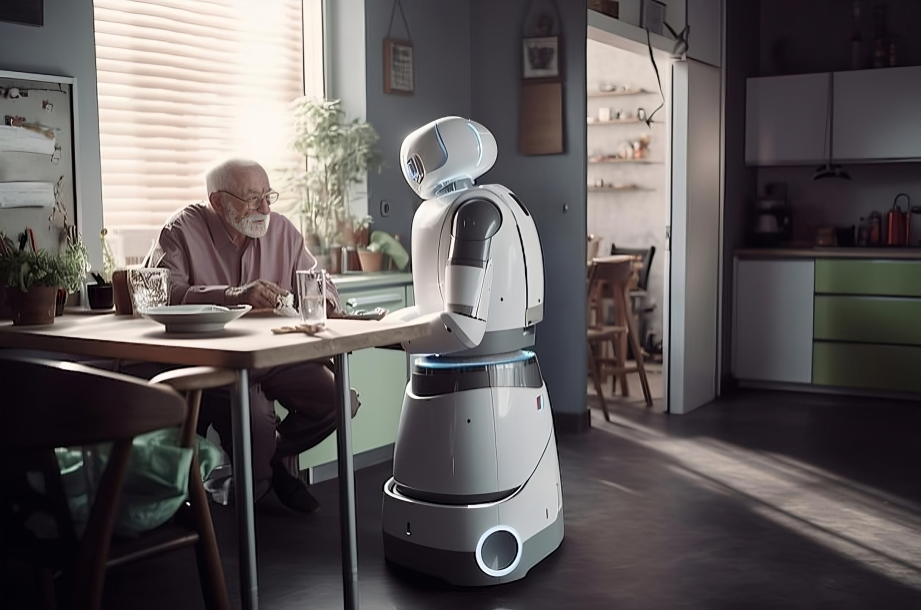

Embodied AI connects perception, language, and action to enable robots to perform meaningful tasks in the physical world. In robotic manipulation, this ranges from household chores and factory automation to overcoming obstacles like doors during navigation. In visual search, it spans locating everyday items such as keys or cooking ingredients, as well as broader applications in wildlife monitoring, healthcare, retail, and disaster response. By grounding multimodal representations in action, Embodied AI moves beyond passive recognition toward robots that can reason, interact, and adapt across diverse environments.

Despite rapid progress, current methods face key limitations. Specialist models, whether for manipulation or search, are typically overfitted to narrow tasks and environments, which limits their ability to generalize to new objects, targets, or contexts. Meanwhile, state-of-the-art vision-language models (VLMs), though powerful in semantic reasoning, remain largely ungrounded in the physical world: they require explicit human instructions, cannot infer implicit physical goals, and often produce action outputs that are brittle, unsafe, or hard to scale. Together, these issues restrict robots’ ability to robustly handle the variability and complexity of long-horizon, real-world tasks.

To overcome these challenges, we propose leveraging Vision-Language-Action (VLA) models as a unified foundation for embodied tasks. VLAs couple perception and language with action generation, enabling robots to translate multimodal embeddings into actionable strategies for both manipulation and search. Future work will explore reinforcement learning to enhance VLAs in three ways: grounding multimodal perception in real-world feedback, improving adaptation beyond static datasets, and strengthening long-horizon decision-making through better credit assignment. In parallel, deploying these models on real robots and incorporating additional sensory modalities, such as audio and proprioception, will provide richer grounding and greater adaptability. Together, these directions pave the way toward general-purpose robots capable of robust generalization and reliable execution of complex, multi-step tasks in diverse real-world settings.

Project reports from previous years:

- Search-TTA: A Multimodal Test-Time Adaptation Framework for Visual Search in the Wild

- Search-TTA Hugging Face Demo

- Context Mask Priors via Vision-Language Model for Ergodic Search

People

Guillaume SARTORETTI