Past/Current Research Projects

Vision-Language-Action Foundation Models for General Embodied Intelligence

Embodied AI uses Vision-Language-Action models to connect perception, language, and action, aiming to build general robots that can adapt, reason, and perform complex tasks in real-world environments.

Decentralized Collision-Free Multi-Agent Path Finding (MAPF)

Distributed reinforcement learning for scalable, decentralized multi-agent path finding in highly structured environments (e.g., Amazon fulfillment centers).

Communication Learning for True Cooperation in Multi-Agent Systems

Simultaneous learning of communication and action policies in multi-agent systems.

Autonomous Robotic Exploration and Search in Complex Environments

Deployment of robots to conduct efficient exploration and search in complex environments.

Multi-Agent Search/Surveillance of Adversarial/Evasive Agents

Multi-robot search and monitoring of an area to locate potentially evasive targets, by learning strategies in a mixed cooperative-competitive environment.

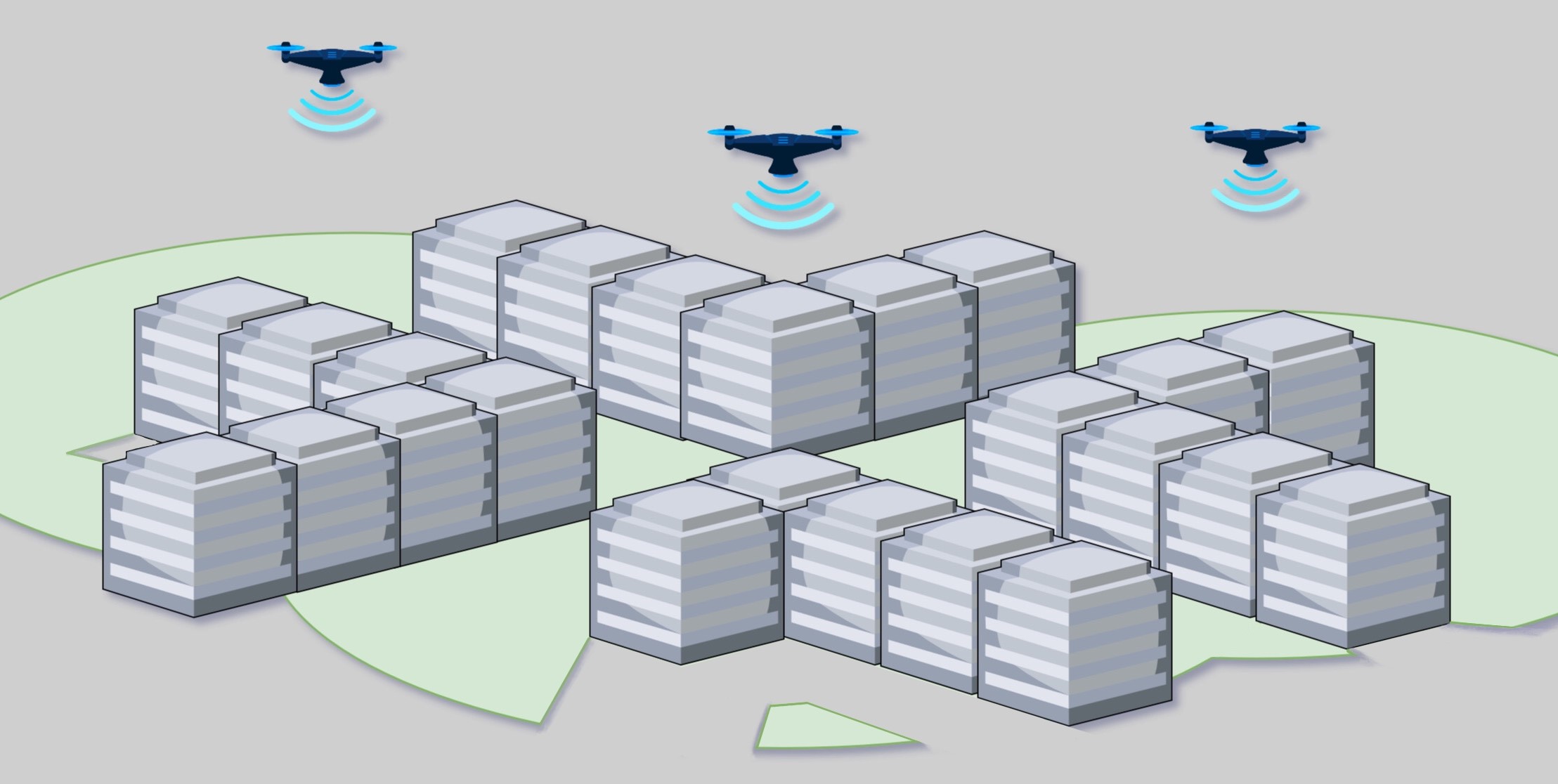

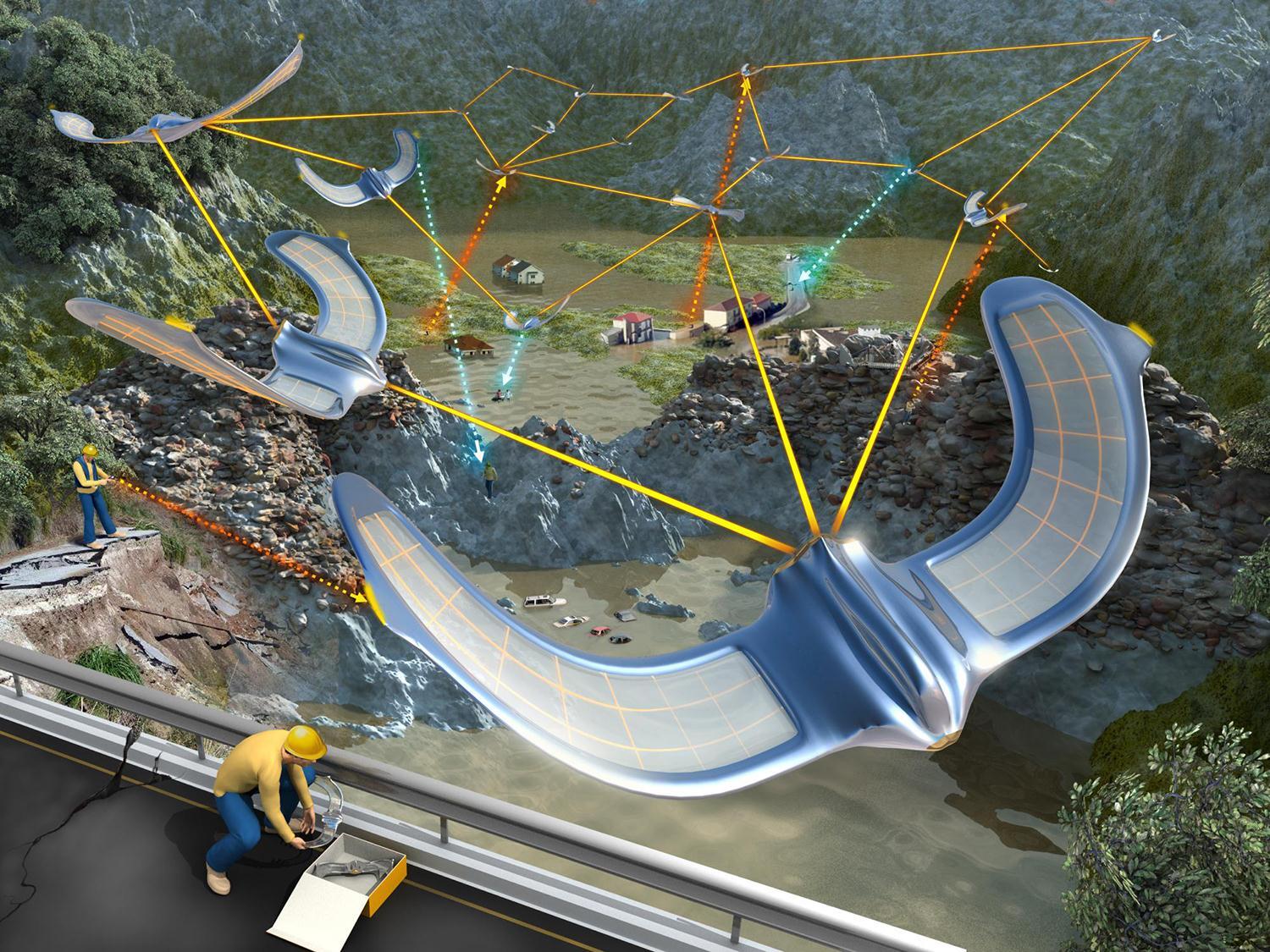

Communication-Aware Multi-Agent Exploration

Decentralized multi-robot exploration in communication-constrained environments, ensuring appropriate connectivity and adaptability in real-world conditions.

Distributed Learning-Based Intelligent Transportation System

Distributed reinforcement learning for junction-level traffic light phase control, and for decentralized control of connected and autonomous vehicles (CAVs) via communication learning.

Learning Multi-Agent Strategies

Extension of model-based skill learning to the multi-agent reinforcement learning setting.

Environmental Interactions by Autonomous (Legged, Aquatic) Robots

This project aims to exploit manipulation capabilities to boost the performance of legged robots in both industrial and real-world situations.