Embodied Vision-Language Foundation Models for Visual Active Search

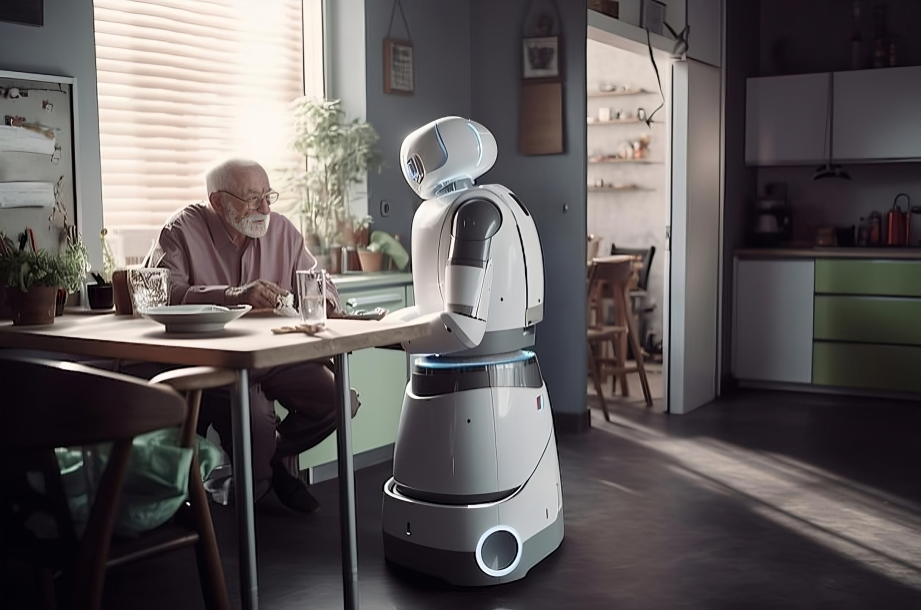

Visual active search uses visual semantics to locate objects of interest in applications such as healthcare, retail, search and rescue, and wildlife monitoring. Coordinating multiple robots lets a search cover larger areas more efficiently, which makes it practical in real-world scenarios where targets must be found quickly and accurately.

However, existing methods face several limitations. Traditional feature extraction techniques are often hand-crafted or trained for specific targets, which limits their ability to generalize to new object classes. Large language models (LLMs) excel at natural language reasoning but are restricted to text and lack grounding in the physical world. Embodied vision-language models (VLMs), which integrate real-world sensor data such as camera feeds, still depend on explicit human instructions. This prevents them from reasoning implicitly about search tasks (e.g., identifying which fruit on a platter contains the most vitamin C, or identifying the areas on a satellite image where an endangered animal species is most likely to be found).

To address these challenges, we propose embodied VLMs capable of indirect reasoning for challenging search tasks. Since foundation models often struggle to generate free-form actions directly, we use reinforcement learning (RL) to let robots translate the visual-text embeddings from VLMs into coordinated search strategies. Future work will focus on deploying these models on real robots and incorporating additional sensory modalities, such as audio and proprioception, to extend our active search work to other applications.
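To make the embedding-to-search pipeline more concrete, here is a minimal, hypothetical sketch in plain Python. It assumes a VLM has already produced one embedding per image patch and one for the text query in a shared space (both are made-up inputs here), converts their cosine similarities into a probability map over the search area, and then lets a team of robots sweep that map. The greedy planner is a hand-written stand-in for the learned RL policy described above, not the method from the papers listed below, and the names `prior_map` and `greedy_team_search` are illustrative only.

```python
import math
from typing import List, Tuple

Vector = List[float]
Cell = Tuple[int, int]


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity between two embedding vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def prior_map(patch_emb: List[List[Vector]], text_emb: Vector,
              temperature: float = 0.1) -> List[List[float]]:
    """Softmax over patch/text cosine similarities: P(target is in cell) per grid cell.

    `patch_emb[r][c]` and `text_emb` are hypothetical VLM outputs in a shared space.
    """
    sims = [[cosine(p, text_emb) / temperature for p in row] for row in patch_emb]
    m = max(max(row) for row in sims)  # subtract the max for numerical stability
    exps = [[math.exp(s - m) for s in row] for row in sims]
    total = sum(sum(row) for row in exps)
    return [[e / total for e in row] for row in exps]


def greedy_team_search(prob: List[List[float]], starts: List[Cell],
                       steps: int) -> Tuple[List[List[Cell]], float]:
    """Greedy stand-in for a learned RL policy (hypothetical, not the authors' method).

    At every step each robot, in turn, moves to the 4-connected neighbor (or stays put)
    with the most remaining probability mass. Visited cells are zeroed, which assumes
    perfect detection on arrival and steers robots away from cells a teammate has
    already covered. Returns each robot's path and the total probability mass covered.
    """
    h, w = len(prob), len(prob[0])
    belief = [row[:] for row in prob]  # copy so the caller's prior is untouched
    positions = list(starts)
    paths = [[p] for p in positions]
    covered = 0.0
    for r, c in positions:  # the start cells are searched first
        covered += belief[r][c]
        belief[r][c] = 0.0
    for _ in range(steps):
        for i, (r, c) in enumerate(positions):
            moves = [(r + dr, c + dc)
                     for dr, dc in ((0, 1), (1, 0), (0, -1), (-1, 0), (0, 0))
                     if 0 <= r + dr < h and 0 <= c + dc < w]
            nr, nc = max(moves, key=lambda rc: belief[rc[0]][rc[1]])
            covered += belief[nr][nc]
            belief[nr][nc] = 0.0
            positions[i] = (nr, nc)
            paths[i].append((nr, nc))
    return paths, covered


if __name__ == "__main__":
    # Toy 2x3 "satellite image": two patches match the text query, four do not.
    patches = [[[1.0, 0.0], [0.0, 1.0], [0.0, 1.0]],
               [[0.0, 1.0], [0.0, 1.0], [1.0, 0.0]]]
    query = [1.0, 0.0]
    prior = prior_map(patches, query)
    paths, covered = greedy_team_search(prior, starts=[(0, 1), (1, 1)], steps=1)
    print(paths)              # [[(0, 1), (0, 0)], [(1, 1), (1, 2)]]
    print(round(covered, 3))  # 1.0
```

Zeroing visited cells in a shared belief map is about the simplest form of multi-robot coordination; a learned policy of the kind described above is meant to replace this fixed rule with behavior trained directly on the VLM embeddings.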

Relevant Papers:

- Search-TTA: A Multimodal Test-Time Adaptation Framework for Visual Search in the Wild

- Search-TTA Hugging Face Demo

- Context Mask Priors via Vision-Language Model for Ergodic Search

People

Guillaume SARTORETTI

Boyang LIU